The recent Indian elections witnessed the unprecedented use of generative AI, leading to a surge in misinformation and deepfakes. Political parties leveraged AI to create digital avatars of deceased leaders, while deepfakes of Bollywood actors circulated on social media.

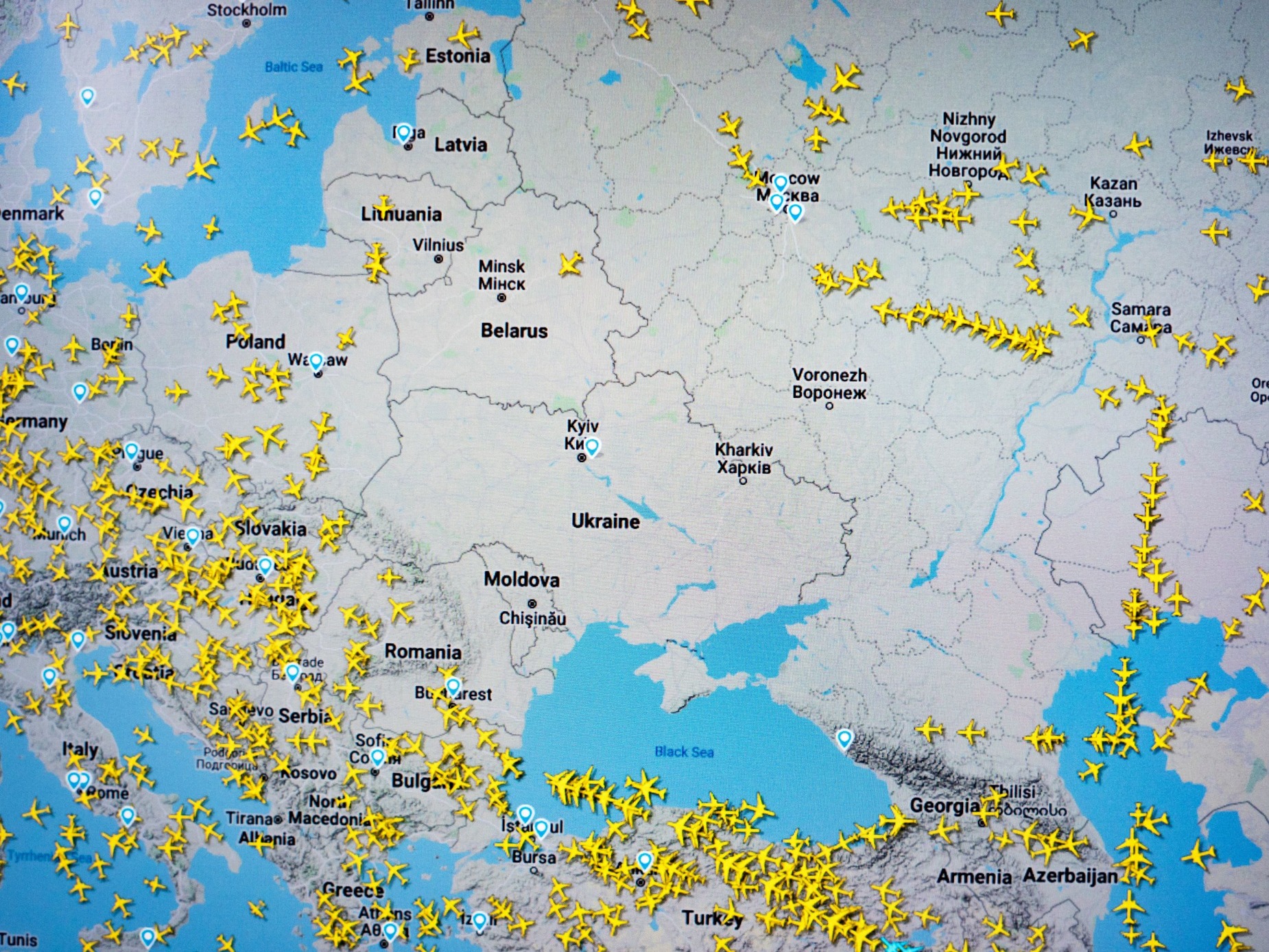

The world's largest democratic exercise concluded as India's incumbent secured another term in office. Besides being the largest, this was also India's first general election of the generative AI era, and the impact was profound as truth blurred with deceit. Indian voters were inundated by a deluge of misinformation and counterfeit media synthesised using AI. Politicians' doppelgangers, voice impersonations, deceptive edits and much more flooded social media. The integrity of democratic discourse was put to the test as voters struggled to distinguish fact from fiction amid an unprecedented onslaught of AI-enabled disinformation.

Manipulated facts and mendacity are long-standing issues in elections, but the rise of generative AI has added a new dimension of complexity and efficiency to the spread of misinformation. In India, this technological shift profoundly affected voter perception and electoral integrity. AI-generated misinformation, particularly deepfakes and manipulated media, has emerged as a significant challenge, exacerbating cognitive biases and complicating the democratic process. According to Adobe's "Future of Trust Study for India", an overwhelming majority of Indians (86 per cent) were concerned that misinformation and deceptive deepfake content could undermine the integrity of future elections in the country.

Misinformation Outbreak in India

In a bizarre turn of events, deceased politicians were seen soliciting votes for their parties. During recent election campaigns, the Dravida Munnetra Kazhagam (DMK) and the All India Anna Dravida Munnetra Kazhagam (AIADMK) used AI to create digital avatars and voice reproductions of their late leaders, M. Karunanidhi and J. Jayalalithaa. These digital representations delivered speeches and endorsements, playing on the emotional connection voters had with these iconic figures. The use of such avatars raises ethical questions about consent and the manipulation of public sentiment.

The misuse of AI extended far beyond impersonations of politicians. Viral videos surfaced featuring startlingly realistic AI-generated personas of the prominent Bollywood actors Aamir Khan and Ranveer Singh. In these deepfakes, the digital doppelgangers delivered scathing rebukes of Prime Minister Modi's tenure, accusing him of failing to fulfil key campaign promises and of inadequately addressing the nation's pressing economic challenges over his two terms in office. Both actors vehemently disavowed the fabricated videos and filed police complaints over the malicious use of their likenesses. The spread of such deceptive content stoked further confusion and undermined electoral discourse, especially in a country like India, where Bollywood carries emotional weight far beyond mere entertainment.

AI also enabled parties to achieve hyper-personalisation. Voters received messages in which Prime Minister Modi appeared to address them personally by name. The precision targeting enabled by generative AI has allowed campaigners to create tailored avatar videos or audio calls in any of India's many regional languages and dialects, forging a more direct connection with diverse voter demographics. However, the lack of clear sourcing for these personalised communications has raised concerns about the potential for large-scale voter manipulation and the erosion of trust in the electoral process.

Another alarming attempt to manipulate voter perception came to light in the form of a sophisticated AI-driven influence operation run by the Israeli political campaign management firm STOIC. First uncovered by OpenAI, which dubbed it "Zero Zeno", this covert operation highlights the growing threat of AI-generated misinformation to democratic processes worldwide. STOIC's activities involved creating and disseminating content critical of the ruling Bharatiya Janata Party (BJP) while promoting the opposition Congress party. According to OpenAI's report, the campaign generated misleading comments and articles, which were distributed across social media platforms such as Facebook, X (formerly Twitter) and Instagram.

The revelation of the Zero Zeno campaign sparked significant political reactions in India. The BJP condemned the interference, highlighting the dangers of foreign influence on the country's democratic processes. Rajeev Chandrasekhar, the Union Minister of State for Electronics and IT, called for thorough investigations into such activities to safeguard the integrity of elections. He took to X, stating, "It is absolutely clear and obvious that BJP was and is the target of influence operations, misinformation and foreign interference, being done by and/or on behalf of some Indian political parties. This is a very dangerous threat to our democracy. It is clear that vested interests in India and outside are clearly driving this and need to be deeply scrutinised, investigated and exposed."

Beyond these grave and direct uses of AI, Indian users' social media feeds were saturated with deepfakes disguised as merriment. These deepfakes featured Prime Minister Narendra Modi in Bollywood-style performances: vibrant dance routines and renditions of popular songs. They appear to have been used to humanise the leader and make him seem more relatable and charismatic to the electorate.

One of the key impacts of these deepfakes is their potential to influence younger voters. AI expert Muralikrishnan Chinnadurai noted that such content could make Modi seem like a more approachable and softer leader, which can resonate particularly with younger, more digitally savvy voters who might be more impressed by this novel use of technology.

Machinery behind Misinformation

Supply rose to meet the demand for AI-generated media. The widespread demand for and consumption of AI-enabled media gave rise to a million-dollar industry in India. Well-organised firms use AI to produce political content at the request of political parties. Demand is so high that these firms are swamped with contracts and often have to bring in external help to meet deadlines.

"We have hired people who have previously worked in [the film] industry in VFX [or] CGI... for high-quality output and fast delivery," Nayagam, who has delivered AI media to multiple political parties, told Rest of World. He added, "Each candidate is willing to spend over a million rupees [$12,000] on using AI technology for their election campaigning."

Cognitive Impact on Voter Perception

While this AI-manufactured content might seem trivial at first glance, its impact is profound. Research suggests that one of the primary cognitive biases AI-generated misinformation exploits is confirmation bias: voters tend to favour information that confirms their pre-existing beliefs and attitudes. AI systems can generate tailored misinformation that aligns with individual biases, reinforcing false narratives and entrenching political polarisation.

The sheer volume of AI-generated misinformation contributes to cognitive overload. Voters are bombarded with information, making it challenging to process and evaluate the credibility of each piece. This overload can lead to heuristic processing, where individuals rely on cognitive shortcuts rather than critical analysis, increasing their susceptibility to misinformation.

AI-generated misinformation can undermine trust in legitimate news sources. When voters encounter conflicting information, their trust in reliable media diminishes. This erosion of trust complicates the task of distinguishing between credible and false information, further distorting voter perception.

Combating AI Misinformation

The impact of AI-generated fake media on voters is clearly significant. This makes it imperative to establish ways to curb such malpractices and help voters quickly separate truth from deceit. Efforts are already under way. Meta, the parent company of Facebook and Instagram, has recognised the profound influence social media can have on elections and, given the increasing sophistication of AI-generated content, has implemented several strategies to curb misinformation.

Regarding the Indian elections, Meta stated, "We have made further investments in expanding our third-party fact-checking network in India ahead of the General Elections 2024. We have added Press Trust of India’s (PTI) dedicated fact-checking unit within the newswire’s editorial department to our already robust network of independent fact-checkers in the country. The partnership will enable PTI to identify, review and rate content as misinformation across Meta platforms."

To combat the rising tide of AI-enabled disinformation, OpenAI, the company behind ChatGPT, has developed a deepfake detection tool designed for disinformation researchers. The tool is primarily built to detect images generated by OpenAI's own popular image generator, DALL-E. While the company claims the detector correctly identifies 98.8 per cent of images created by its latest model, DALL-E 3, it acknowledges that the tool's capabilities are limited when it comes to synthetic media generated by other prevalent AI tools such as Midjourney and Stability AI's models.

Although present-day deepfake detection systems cannot yet keep pace with the spread of AI misinformation, efforts are under way on multiple fronts. Several startups and businesses have emerged to tackle the problem. Zohaib Ahmed, founder and CEO of the AI firm Resemble AI, says, "By distributing and seamlessly integrating deepfake technologies into widely-used platforms, as seen in today's phone spam filters, we can protect more voters regardless of their own resources or knowledge in this area."

However, the role of the public cannot be overstated. Empowering citizens to identify and report misinformation is crucial. Efforts to strengthen digital literacy and critical thinking among the populace are fundamental to helping people see through the AI haze.

Neil Sahota, a leading AI adviser to the UN, says, "Educating the public about the tactics used in digital manipulation, how to identify credible information sources, and the importance of critical thinking is essential in building resilience against misinformation."

The rise of generative AI has put the world's democracies to an uncharted test. While the Indian elections have concluded, the clamour created by AI has set a precedent for the world to contemplate. It is now clear that the ultimate custodian of truth is an informed citizen.