We’ve all been amazed by new advances in AI for newsrooms. But we must also focus on ensuring its ethical use. Here are some concerns to address.

In previous articles about using AI in journalism, we looked at its prospects and promises. Now, it’s time to venture into the dark side.

Most of the concerns regarding the use of AI for editorial content stem from two issues: a) how inaccessible these systems can be to ordinary people and the untrained eye, and b) how easy it is to misuse technology to cause real harm.

Read more:

What is ChatGPT and why is it important for journalists?

AI in the newsroom - how it could work

How to prompt ChatGPT effectively

What happened when I asked ChatGPT to write my article

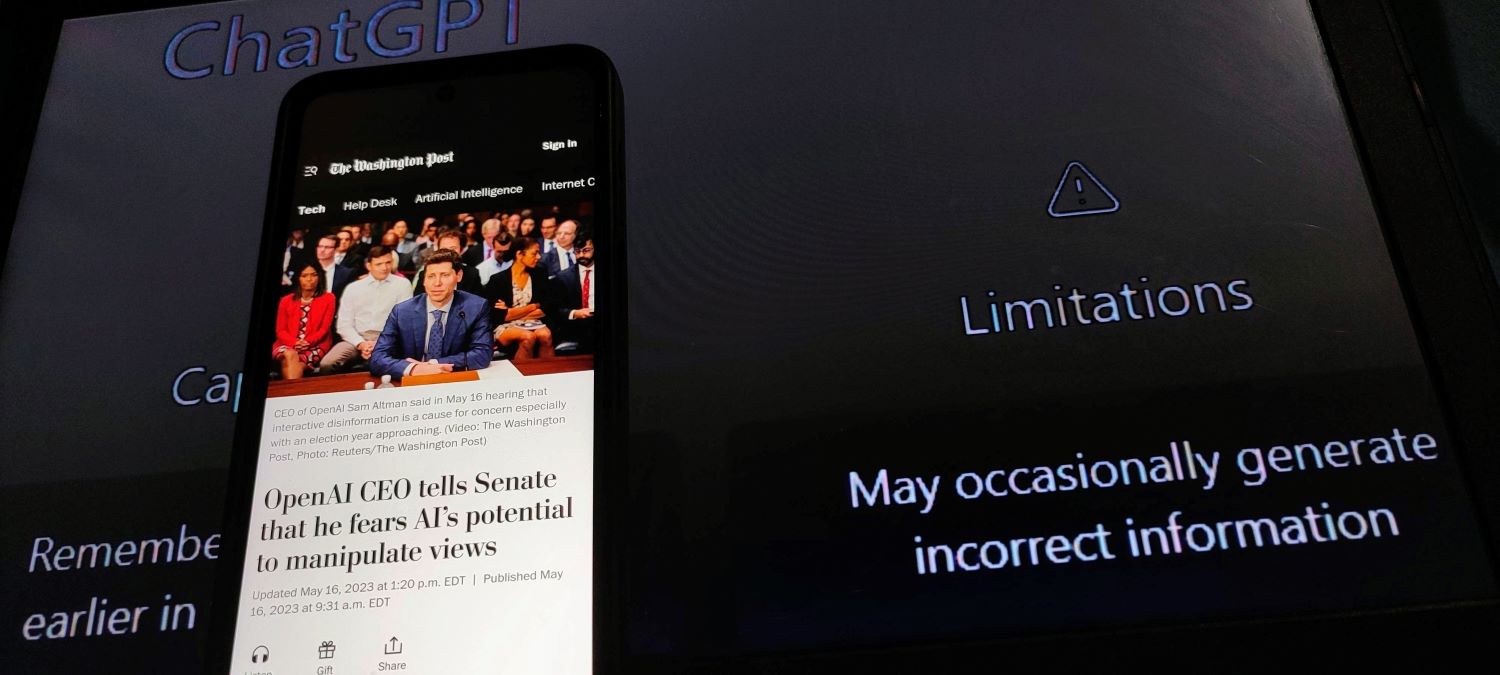

We are in the early days of using technology such as ChatGPT for news, and while we have been following the technological advances, we are still missing a solid framework in the form of legal and ethical guidelines and regulations for its use in newsrooms. I am sure there is work being done on that.

So, here are some concerns regarding AI for news that I have identified. As systems change constantly, some of the following may be solved soon, or they could be amplified as we use AI more and more.

Bias

The problem: Biases may emerge from how AI systems are built, how their algorithms are tuned and from the data used to train them. Imagine an AI-powered news outlet whose algorithm consistently recommends and promotes news articles that align with a specific political ideology, ignore differing perspectives, eliminate opposing voices or reinforce stereotypes.

What can be done: Openness about training data and methods is essential. This will eventually be enforced with regulations. But it can also be managed with a human eye that edits AI work, preventing biased material from reaching the audience. The technology is still unreliable for unfiltered publication. But here’s another angle: AI could be used to identify biases in human news reporting.
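
One way an editor could put numbers on the recommendation scenario above is to audit a sample of what the system promotes. The following is a minimal sketch, not a description of any real outlet's system: the `leaning` labels, the sample data and the 60% threshold are all assumptions made for illustration, and labelling articles by leaning is itself a human judgement.

```python
from collections import Counter


def leaning_shares(recommended):
    """Return the share of recommendations carrying each (hypothetical) leaning label."""
    counts = Counter(item["leaning"] for item in recommended)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def flag_skew(shares, threshold=0.6):
    """Return labels whose share exceeds the threshold (an arbitrary, assumed cut-off)."""
    return sorted(label for label, share in shares.items() if share > threshold)


# Invented sample: ten recommended articles, hand-labelled by an editor.
sample = (
    [{"title": f"Story {i}", "leaning": "left"} for i in range(7)]
    + [{"title": f"Story {i}", "leaning": "right"} for i in range(7, 9)]
    + [{"title": "Story 9", "leaning": "centre"}]
)

shares = leaning_shares(sample)
print(shares)
print("Over-represented:", flag_skew(shares))
```

A check like this does not prove bias on its own, but it gives the human editor something concrete to question before material reaches the audience.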

Accuracy

The problem: Editorial work relies on accurate and reliable news reporting. While checking facts, AI systems may misidentify inaccurate information (satire, for example) as credible news or fail to give context.

What can be done: Fact-checking will get much better as AI systems get “smarter”, their algorithms are refined and they are trained on better datasets. Combining the strengths of human journalists and artificial intelligence would mean they could complement each other’s skills in verification.

Transparency

The problem: It’s still early days for using tools with these sorts of capabilities and it’s tempting to dive in and adopt AI in the newsroom without fully understanding how it operates, its decision-making processes and the underlying algorithms.

What can be done: There is not a great deal that can be done at this stage, as AI is already an industry worth billions, but the development of open-source AI could be a start towards trusting it for editorial use, combined with a legal and ethical framework.

One of the biggest advancements I’d like to see is “explainable AI” - a system that would provide understandable explanations for its decisions and allow users to comprehend how information has been selected and filtered.

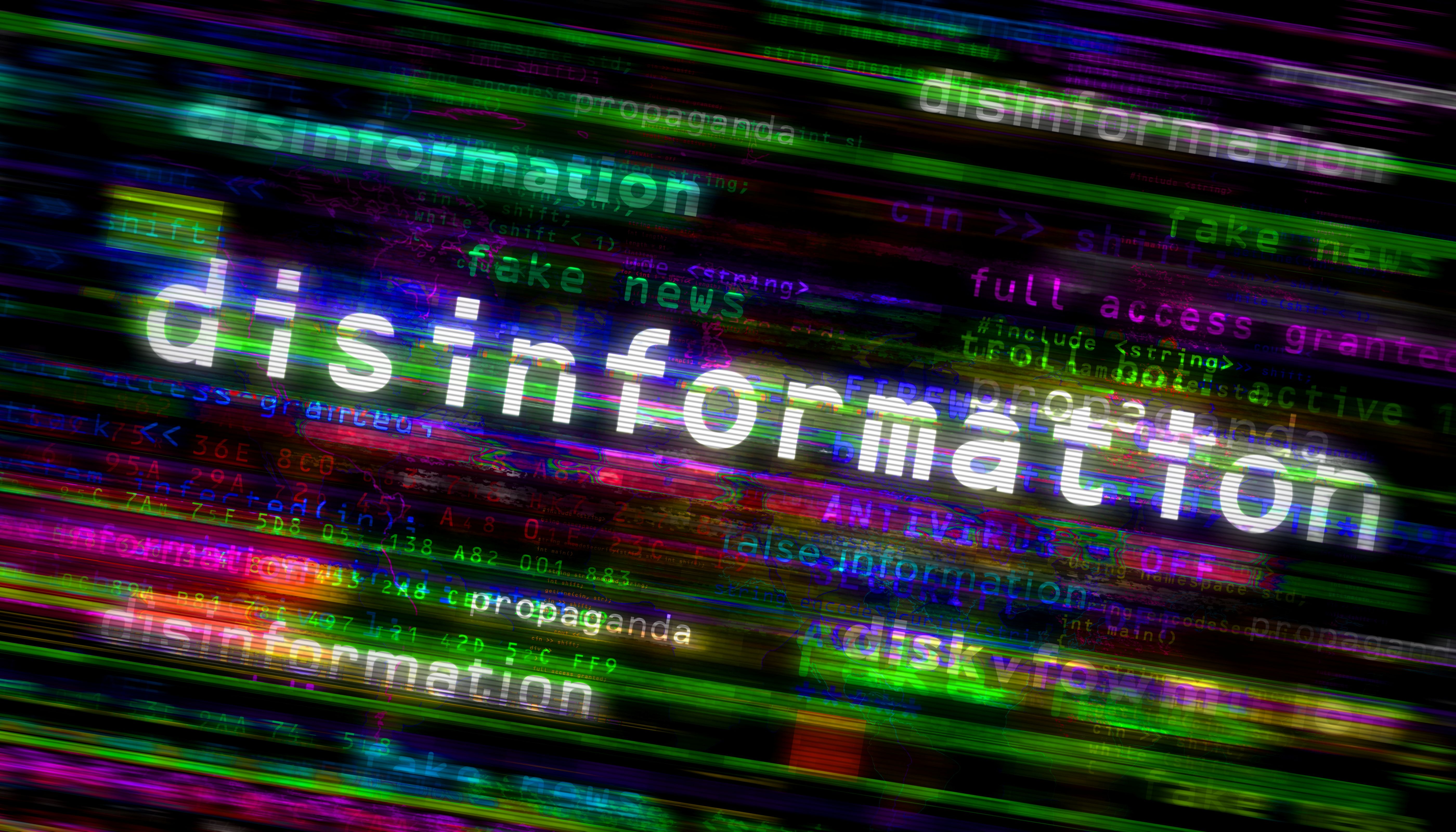

Misinformation

The problem: In these early days of AI, there have been some chilling displays of deepfake video and audio - and demonstrations of how easy it is to pull off something embarrassing and totally fake.

What can be done: Sure, technology can be used for good and evil. AI systems are good at creating deepfakes but they can also be great at detecting them. Image and video analysis, pattern recognition and natural language processing are boosted by artificial intelligence. It would be great to put these tools in the service of good journalism but before that happens, we need to educate the public about the dangers of fake AI-generated content.

Privacy

The problem: Having an AI serve us with recommendations and personalised content means we are handing over our data: browsing history, reading habits and personal preferences. This extensive data collection - and its storage, retention and sharing - raises concerns about the privacy and security of user information. And there is another layer to all this: have we signed off on using our data to train AI?

What can be done: This is not a new problem for digital news businesses. It would probably have to be treated with the same policies in effect today: data minimisation (collection of only necessary information), strong data encryption and access control, clear privacy policies and regular audits to assess compliance.

This is another case where transparency is needed: users must understand how the AI handles their data and give explicit consent at every step. They should also be able to opt out of giving away sensitive information.
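
To make data minimisation and consent a little more concrete, here is a minimal sketch of how a reading event might be reduced before it is stored. Everything in it is an assumption for illustration: the field names, the `ALLOWED_FIELDS` set, the salt and the `consented` flag are hypothetical, and a salted hash is pseudonymisation rather than true anonymisation.

```python
import hashlib

# Fields a recommendation feature is assumed to need; everything else is dropped.
ALLOWED_FIELDS = {"article_id", "section", "read_seconds"}


def pseudonymise(user_id, salt):
    """Replace a user ID with a salted SHA-256 digest (pseudonymisation only)."""
    return hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()


def minimise(event, salt, consented):
    """Return a reduced copy of a reading event, or None if consent was not given."""
    if not consented:
        return None
    reduced = {key: value for key, value in event.items() if key in ALLOWED_FIELDS}
    reduced["user"] = pseudonymise(event["user_id"], salt)
    return reduced


# Invented example event containing more than the feature needs.
raw_event = {
    "user_id": "reader-123",
    "email": "reader@example.com",
    "ip": "203.0.113.7",
    "article_id": "a-42",
    "section": "politics",
    "read_seconds": 95,
}

stored = minimise(raw_event, salt="example-salt", consented=True)
print(sorted(stored))
print(minimise(raw_event, salt="example-salt", consented=False))
```

The email and IP address never reach storage, and a user who has not consented produces no record at all. A real deployment would also need the encryption, access control and audits mentioned above.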

Lack of human judgement

The problem: This refers to the limited ability of AI systems to capture the emotion of a sensitive event or apply subjective judgement. It can result in tone-deaf reports or an insensitive portrayal of a situation.

What can be done: A hybrid approach, where humans and AI collaborate. Delegating routine tasks to AI means journalists must still oversee and intervene to ensure stories are treated properly. Users can play a role here, giving feedback and evaluating AI-generated content.

Job implications

The problem: Ever since the promise of AI in the newsroom became real, critics have expressed concerns about the potential loss of jobs for humans. Automation will certainly bring changes in the workplace, as has happened with several emerging technologies throughout history.

What can be done: This is a major opportunity for journalists to improve their skills, invest in training programmes for the skills needed to work alongside AI and adapt to the new roles created in the newsroom. There are areas of editorial work in which AI is simply not very efficient, and these can remain “manual”; new workflows will also be created. In most of them, journalists will supervise algorithmic output, the type of which we have described extensively in previous articles - do read more! Humans and AI systems must collaborate and complement each other to produce editorial content.

Inclusivity and accessibility

The problem: As AI systems have a role in how we deliver news to various audiences, we need to make sure they are accessible to a diverse range of people and are not excluding any particular sets of users.

What can be done: Artificial intelligence tools can help immensely with equal access and opportunity for engagement with news content and services. Useful features include text-to-speech, automated closed-captioning for video content, alternative formats, automatic adjustment of typography and colours and, especially, multi-modal interfaces for communication via text, audio and visual means.