In September 2018, Twitter launched the “Creating New Policies Together” survey with the aim of producing an updated content policy. As part of this updated policy, “language that makes someone less than human” – even where no specific person is targeted – was added to the website’s definition of hateful content.

Explaining its choice of “dehumanization” to describe offensive tweets, the website referenced a study connecting language of this kind with violence. According to the study, using descriptions that deny someone’s humanity makes some people more willing to accept the use of violence against them.

As a result, Twitter proposed banning dehumanising language. After more than 8,000 people participated in the survey, religious groups were added to the list of those protected by the content policy, meaning that the ban was extended to any content insulting individuals on the basis of their religious beliefs.

Between insulting religions and government propaganda

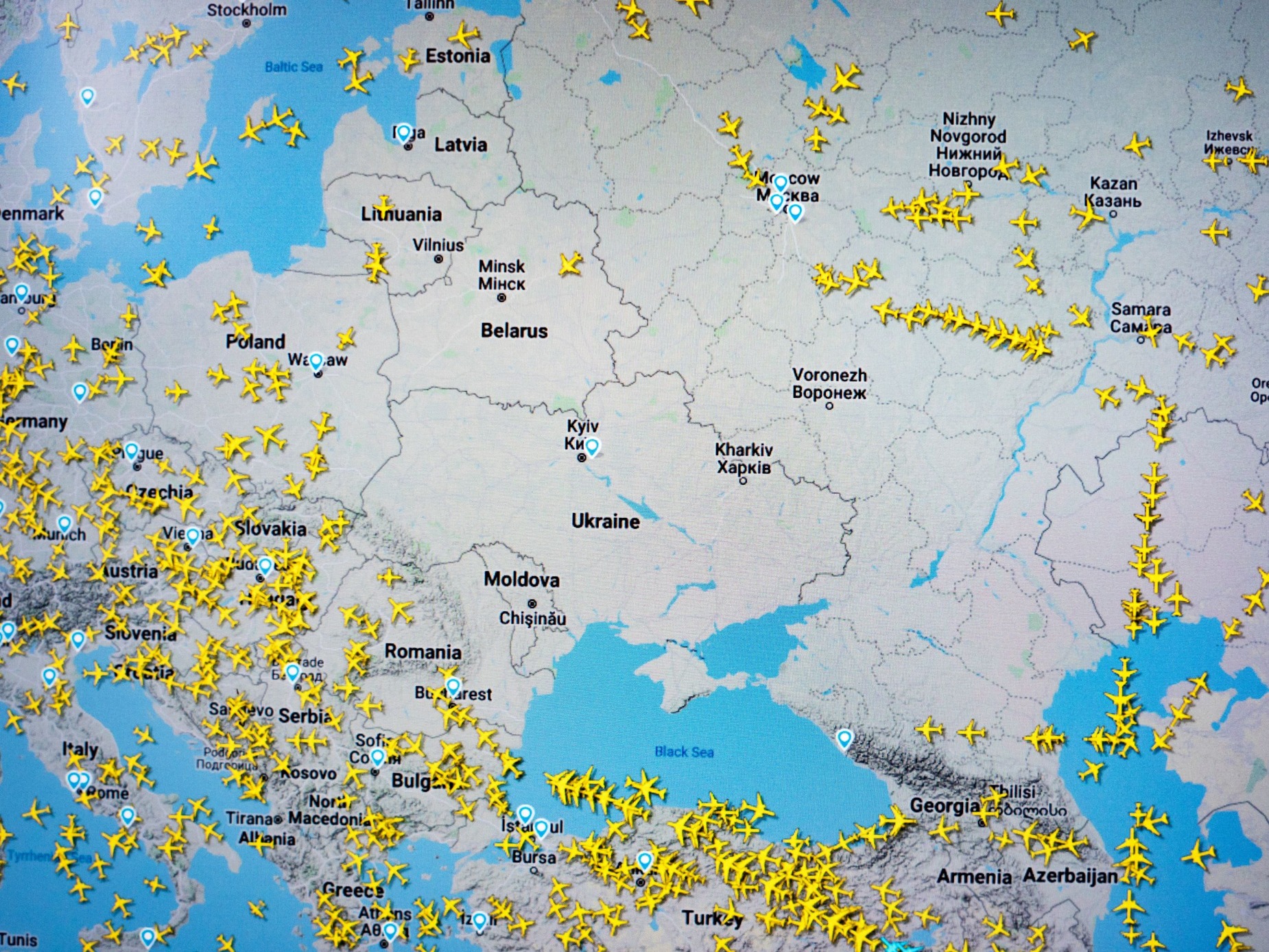

Like other social media platforms, Twitter has been accused of not doing enough to combat incitement and is often blamed for the growth of anti-refugee and anti-minority discourse. The recent rise of the far right in Europe and the USA has been reflected in a noticeable increase in the number of tweets warning of the dangers posed by refugees and immigrants and of their effect on the demography of western countries.

Twitter did nothing about these tweets, even though it zealously enforces its policies against accounts opposed to the West and Western policy, those that support “resistance” movements, and even those that spread what is generally considered to be government propaganda (most recently accounts used by China during the 2019 protests in Hong Kong).

So if Twitter has decided that it has the capacity and the technology to combat any offensive content, why is it so much more forgiving of some extremist tweets than others?

Reporting content

More than 500 million tweets are published on Twitter every day in the world’s various languages, among them thousands of tweets that violate the website’s policies. According to a transparency report, within one six-month period more than 3.6 million tweets and users were reported, and 600,000 accounts were found to be in violation of its rules. Twitter only censors content or removes tweets – even those that violate its policies – after a specific tweet or user is reported. These reports are handled by a content review team, whose content moderators inspect the tweet and the reason for the report before deciding whether it should be deleted or not.

Once insulting religions was added to Twitter’s list of content considered offensive, the website announced that tweets which violate the new policy but were published before the policy came into effect would be removed only if they were reported. As a result, older tweets can be removed if they are reported for insulting the followers of a particular religion, LGBT people, or any of the other groups protected by the content policy – even if they did not violate this policy at the time of writing.

But in an ever more extreme world, and with easily offended users, thousands of accounts and tweets are reported to Twitter every day. So who decides exactly how to apply the website’s policies?

Content moderation companies

In the world of social media, policies are made and applied by different people. Most websites outsource content moderation to other companies such as Accenture, TaskUs, or Cognizant. These companies hire employees from across the globe and provide them with lists of banned content for different websites.

Their moderators sit at a computer screen for 8 or 9 hours a day, reviewing around one thousand tweets and messages in a single session, according to a report by the Washington Post. This gives them, on average, only about 30 seconds to judge each item. Twitter entrusts the enforcement of its own flexible content policy – and the moderation of its hundreds of millions of tweets – to 1,500 Cognizant employees working in eight different countries.

The offensive content common on Twitter includes hate speech, sexual images, and even (on accounts associated with armed groups) depictions of human rights violations. Given this variety, those who review reports and decide what happens to tweets should be politically well informed or experts in law and content policy. At the very least, they should have a good command of the language and culture of the country in which the tweet was published. But the Washington Post report shows that moderators do not necessarily even speak the language used in the tweets they review. The newspaper's interviews with moderators in Manila revealed that they are responsible for content written in as many as ten languages they do not speak, and that they rely on Google Translate when deciding whether or not to remove it. Nor do they have sufficient expertise to understand the background and context of the tweets they read.

Deleting tweets and suspending accounts without considering context has caused Twitter considerable trouble, most recently in August 2019, when the English-language account of Venezuelan President Nicolás Maduro was suspended for several hours before being restored. Outside politics, some comedians have had their accounts suspended over jokes or satirical tweets. More seriously still, deleting content without regard to its context may have consequences for criminal justice.

The ICC has accepted social media posts as evidence of crimes, and in 2017 it issued an arrest warrant for the Libyan commander Mahmoud al-Werfalli based on evidence published on social media websites. But policy changes at these websites, together with users reporting content that documents crimes and human rights violations, have led moderators to delete material that could serve as evidence of war crimes.

Reporting Trump

Moderators are not responsible for tweets published by public personalities or verified accounts, which are referred to senior Twitter employees.

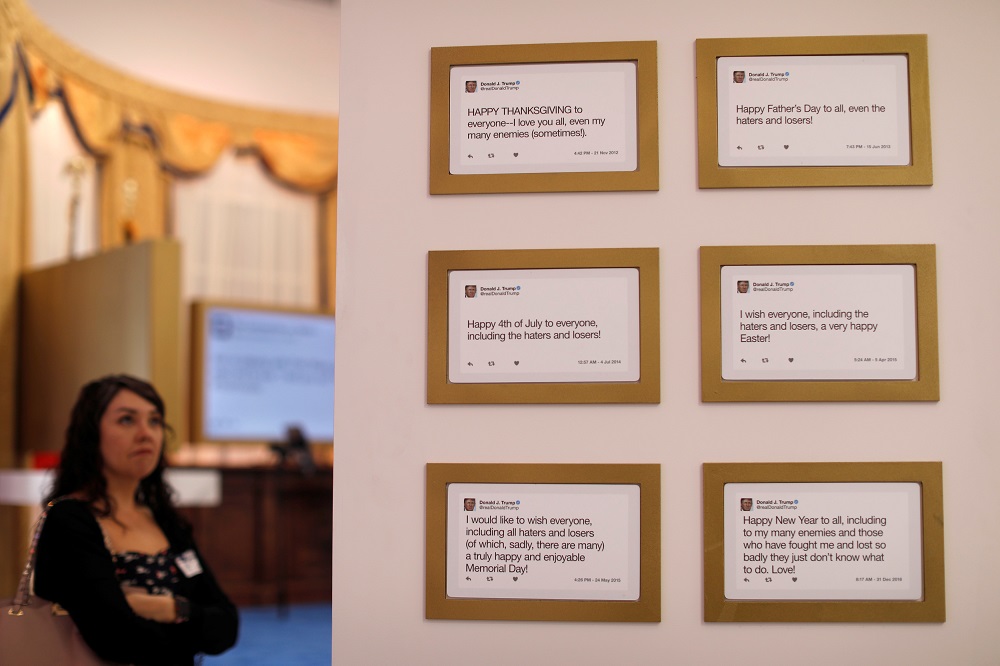

After repeated breaches of its content policy by US President Donald Trump, including incitement against Hillary Clinton, journalists, the media and, eventually, Muslim members of Congress, Twitter was sharply criticised for applying double standards. Until Trump was banned from Twitter in January 2021, the platform did not suspend his account or those of his supporters, even after tweets which accused ANTIFA of being a terrorist organisation. Meanwhile, despite rising numbers of attacks by white supremacist groups, Trump did not once demand that such groups be added to the terrorist blacklist, nor did he even criticise them. This only encouraged other accounts, which saw his anti-refugee tweets as a model to aspire to.

This forgiving attitude towards political figures who stoke xenophobia and describe minorities as “occupiers” or “invaders” has forced the website to defend its policies, which it explained in a blog post as being based on the “public interest”. The post explains that the website makes exceptions for tweets written by politicians or candidates for public office whose accounts are verified and who have more than 100,000 followers.

Although Twitter does not delete such tweets, it does attach a warning informing users that these tweets violate its content policy. It justifies this policy on the grounds that it provides a free space for public discussion and that it allows the public to respond to politicians directly – meaning that users, rather than the website, can hold them accountable for their tweets.

But this so-called space for public discussion does not only mean that politicians are free to breach hate speech policies; exceptions also have to be made for their supporters. Trump’s tweet telling female members of Congress to “go back” to the “places from which they came” – which many considered a racist violation of Twitter policy – was safe from deletion, and the president was never held to account for it before his account was shut down. Nor were those who retweeted it to express their support, or who wrote replies applauding it, because they were not themselves writing racist tweets.

Despite having publicly laid out its criteria for exceptions to its hate speech policy, and despite claiming that this policy is meant to foster healthy discussion between decision-makers and the public, Twitter seems unwilling to ban many other accounts that do not belong to politicians. This is not simply because of their large followings, but also because Twitter bosses are eager to avoid being branded anti-conservative or being wrongly associated with a particular political camp. As one Twitter employee put it, they do not want to be caught up in political polarisation.

Are public personalities the only exception?

Glorifying or promoting “terrorism” or violence is banned on all major social media websites. But in the current global political climate, the boundaries between these concepts have become blurred. After the rise of the so-called Islamic State, which made organised use of social media, these websites came together in the Global Internet Forum to Counter Terrorism (GIFCT), which has thirteen member companies. Facebook, Microsoft, Twitter and YouTube (owned by Alphabet, the parent company of Google) founded the forum in 2017; later members include the file-sharing website Dropbox and Ask.fm.

This forum allows social media websites to share information about accounts and content that incite terrorism and to agree on a common “protocol” for dealing with it. Because each company defines offensive content differently, they have adopted the UN Terrorist Sanctions List as a joint basis for their anti-terror activities. Through GIFCT, they also maintain a shared database of “hashes”, digital fingerprints of banned images and videos, which lets each member detect and remove matching content on its own platform according to criteria that are constantly updated based on new data. These criteria cover the glorification of any “terrorist” act, and the forum has also attempted to define “terrorism” and distinguish it from liberation and self-defence movements.

GIFCT added material from the Christchurch mosque shootings in New Zealand (the video and the statement released by the killer) to its database to prevent their dissemination. However, it has not yet added material from any mass shooting in the US. Meanwhile, the growing frequency of attacks of this kind, some of which target refugees, has led Facebook to add refugees to its list of protected groups and to delete content that promotes, supports or represents white supremacist movements. Twitter, by contrast, continues to ignore white supremacist content and refuses to recognise it as a problem.

Politics or double standards?

Extremist groups and individuals with extremist tendencies do not have a monopoly on violence or incitement to violence. Military and internal security campaigns are constantly being conducted by state armies and internationally recognised forces, and these campaigns inevitably attract both support and opposition. Yet state violence is exempt from Twitter’s content policy.

In the Arab-Israeli conflict, for example, promotion of the Israel Defense Forces (IDF) is not covered by any content policy because the IDF is a state army. Promoting any action by Hamas, on the other hand, will be met with the full force of laws on the dissemination of terrorist material, because the United States designates it a terrorist organisation.

Market democracy

Social media websites market themselves as platforms that encourage democracy and discussion, and claim to have created a space for free communication. In reality, however, they are commercial enterprises seeking to keep users online for as long as possible to maximise advertising revenue.

Like any other commercial company, these websites are subject to the laws of the countries in which they operate. They must abide by the regulations each country imposes or risk losing the right to operate there, and national laws are shaped by politics and vested interests. However objective and unbiased social media websites may claim to be, a single Trump tweet or EU regulation can change all of the rules governing content on Twitter, which is, after all, a company that will ignore or target particular content in order to keep its share price high.

*Title photo: In this photo illustration, the logo for Twitter is projected onto a man on August 9, 2017 in London, England. (Leon Neal/Getty Images)*