This article was originally written in Arabic and translated into English using AI tools, followed by editorial revisions to ensure clarity and accuracy.

How has artificial intelligence emerged as a powerful tool during wartime, and what strategies are fact-checkers adopting to confront this disruptive force in newsrooms? The work of fact-checkers has grown significantly more challenging during the genocide in Palestine, as the Israeli occupation has relied heavily on artificial intelligence to disseminate misinformation.

The Gaza Strip is enduring the devastation of a war of unprecedented intensity that has plunged the region into darkness. This devastation is compounded by the spread of misinformation and disinformation across digital platforms, as political and military leaders of the Israeli occupation contribute to a global storm of lies and manipulation that obscures the truth.

Over the past nine and a half months, hundreds of fabricated images, stories, and news reports have flooded social media and gained global traction amid widespread coverage of the genocide launched by the Israeli occupation on October 7, following Hamas's 'Al-Aqsa Flood' operation targeting settlements in the Gaza envelope.

Misinformation has become a major challenge in recent years, and even reputable media institutions and news agencies have fallen prey to it. A notable example occurred when U.S. President Joe Biden claimed to have seen images of beheaded babies, following Israeli Prime Minister Benjamin Netanyahu’s allegation that Hamas fighters had beheaded 40 Israeli infants. Although the Israeli occupation offered no evidence for the claim, fact-checking platforms failed to curb its widespread dissemination, and it significantly influenced international public opinion. Numerous other examples from the war on Gaza, originating in Israeli media, in foreign outlets sympathetic to its narrative, and in accounts dismissive of Palestinian suffering, illustrate a broader 'war of misinformation.' This battle is waged over awareness, narrative, and the power to define who is the victim and who is the aggressor. Its tactics include spreading false claims, stripping content of its original context, and manipulating or fabricating information.

The misinformation surrounding the war on Gaza confronts the world with one of the greatest fears associated with artificial intelligence: its capacity to falsify reality and its powerful potential to persuade and influence.

Fact-checking platforms have monitored hundreds of claims related to the ongoing war, yet many of these claims could not be addressed with the traditional verification methods commonly used during previous Israeli assaults on Gaza. The current war of extermination has marked a turning point in the spread of misinformation, with artificial intelligence playing a central role. In journalism, AI is often discussed in terms of whether it will replace journalists or enhance their work. As these tools gain traction in newsrooms, their capabilities continue to expand, ranging from data search and extraction to verification. However, the same technologies also pose serious threats to accuracy and objectivity, since they have been used to manipulate reality and spread false or misleading narratives, not only in times of crisis but also under normal conditions. Growing concern about AI’s ability to deceive and shape public perception has therefore become central to the work of fact-checkers. In an increasingly confusing information landscape, trust in the news has eroded, especially as media channels become saturated with speculation, disinformation, and unverified content.

Artificial intelligence can, for instance, generate a strikingly realistic image of a natural landscape: an entirely fabricated scene that still carries a convincing emotional impact. The same applies to synthetic voices and other AI-generated media, especially when they are presented alongside the logo of a reputable media outlet. This tactic is clearly evident in the ongoing war waged by the Israeli occupation on the Gaza Strip, where advanced technologies are being deployed as part of a sophisticated propaganda campaign, a practice commonly referred to as "weaponized artificial intelligence."

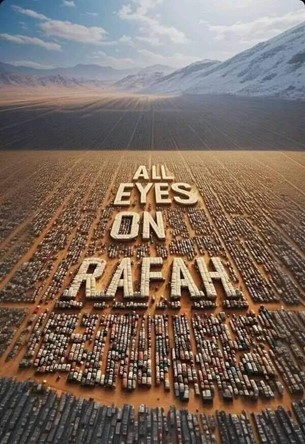

During the war, AI tools have been used in the media to produce compelling content, such as the widely circulated image “All Eyes on Rafah,” which garnered global attention and fueled international solidarity with the city. However, the same technologies have also been used to manufacture deceptive scenes, spread misinformation, and distort facts. The Israeli occupation, for example, has utilized AI to create content that aligns with its political and military objectives in Gaza. This dual capacity, both constructive and destructive, highlights the critical role AI plays in shaping the media landscape, particularly in times of conflict.

As the term suggests, "weaponized" reflects the widespread use and significant influence of artificial intelligence technologies in modern warfare. This use is not limited to the battlefield; it extends powerfully into the media sphere. According to the Israeli occupation army, high-tech systems played a crucial role in doubling its military effectiveness during the 11-day assault of May 2021, known in Palestine as the "Sword of Jerusalem." At the time, Israeli military officials described it as the world’s first "artificial intelligence war." A similar strategy was employed in the war of extermination on Gaza that began in late 2023.

Throughout this conflict, Israeli media outlets and official institutions coordinated the deployment of both real and fake social media accounts. They exploited one of artificial intelligence’s key tools: bots, or counterfeit accounts powered by AI. These bots were programmed to post comments and articles supporting the occupation and undermining Palestinian rights across various platforms, particularly Facebook, X, and Instagram.

On May 29, 2024, Meta reported that it had removed a network of hundreds of fake accounts linked to STOIC, an Israeli company based in Tel Aviv. These AI-driven accounts were used to amplify Israeli propaganda and disseminate false claims, especially to Arabic-speaking audiences. A day later, on May 30, OpenAI, the developer of ChatGPT, announced that it had banned another group of accounts operated by the same company. These accounts had used AI to impersonate Jewish students and African American citizens in an effort to make their messages appear authentic and diverse.

Beyond fake accounts, images and videos emerged as the most potent tools of AI-driven misinformation. Visual media plays a powerful communicative role, often surpassing the impact of words. These images and clips are specifically crafted to shape perception and influence emotion, making them the preferred medium for spreading fabricated stories. Their increasing sophistication poses a serious challenge to fact-checkers, who lack adequate tools to consistently distinguish between genuine and manipulated content.

The Israeli occupation made extensive use of visual material circulating on social media. However, this alone was not enough to elicit global sympathy or successfully portray Israel as the victim and Palestinians as the aggressors, especially as more documentation of Israeli military crimes surfaced. From the outset of the conflict, Israel deployed a new form of warfare focused on information and perception. This strategy aimed to steer international public opinion, strengthen its domestic front, and undermine Palestinian unity through psychological and media manipulation.

These three objectives (shaping international opinion, strengthening domestic morale, and weakening Palestinian unity) were reflected in an image shared by Israeli and pro-Israel accounts on Facebook at the beginning of the war on Gaza in October 2023. The image showed an Israeli soldier holding two infants, accompanied by claims that he had rescued them from Hamas fighters in Gaza. Despite its emotional appeal and widespread circulation, the photo was later found to be AI-generated. A basic visual examination exposed clear signs of manipulation, most notably that the soldier appeared to have three hands.

On December 9, 2023, Israeli accounts on X circulated another image depicting Israeli soldiers celebrating in front of a destroyed building in Gaza, arranged in a formation resembling a Hanukkah menorah, with a six-pointed Star of David drawn on one of the stones in the rubble. Technical analysis revealed numerous visual inconsistencies and distortions, confirming that this image, too, had been generated using artificial intelligence.

The Palestinian Observatory for Fact-Checking and Media Literacy, known as Tahaqaq, has monitored a wide range of misinformation involving artificial intelligence during the war. One notable case involved a video clip that appeared to show American model Bella Hadid condemning the October 7 attack and expressing support for Israel. The video was widely circulated in Israeli and international media. However, Tahaqaq's investigation found that the footage was taken from a 2016 awareness event hosted by the Global Lyme Alliance. The voice had been cloned using artificial intelligence, and the video was manipulated as part of a broader disinformation campaign. This case underscores the urgent need for stronger technological tools and collaborative efforts to counter the spread of media misinformation.

Another form of misinformation that the Observatory team has addressed is what can be described as "fake sympathy." It originates from Palestinian or pro-Palestinian accounts, whose users aim to support the Palestinian narrative by sharing images or video clips found online, often unaware that the material has been manipulated using artificial intelligence. Detecting such falsification is challenging even for professional fact-checkers, so ordinary users, particularly those actively seeking content that aligns with their beliefs or reinforces their stance, are especially vulnerable to these deceptive materials.

One example of this occurred in late October 2023, when a photo was widely circulated on social media. It appeared to show a camp with tents displaying the flags of the occupying state of Israel, suggesting the displacement of settlers from northern and southern areas in response to resistance strikes. However, a technical analysis revealed numerous visual distortions that confirmed the image had been generated using artificial intelligence.

Another example involves a video clip that appeared to show Israeli National Security Minister Itamar Ben-Gvir acknowledging that an Iranian attack on Israel had destroyed two air bases and resulted in the deaths of several Israeli soldiers. At first glance, the video seemed credible and was shared widely across social media platforms. However, upon closer examination, it was found to be manipulated. The voice in the video had been artificially cloned using advanced audio synthesis technology, and the accompanying subtitles were crafted to match the fabricated audio. This deliberate alignment between the fake voice and the misleading translation further increased the clip's perceived authenticity, making it more difficult for viewers to detect the falsification.

Israeli media and its supporters exploited the promotion of such claims by Palestinian accounts as a counter-narrative strategy: they argued that Palestinians were fabricating their suffering, thereby casting doubt on the credibility of the Palestinian narrative of the Israeli war of extermination on Gaza. This approach featured prominently in systematic media campaigns under labels such as "Pallywood" and "Gazawood." However, sporadic and uncoordinated Palestinian content of this kind cannot be classified as the deliberate use of artificial intelligence as a weapon. Unlike these Palestinian posts, the Israeli side has produced a continuous and coordinated stream of claims, backed by official and well-organized media institutions.

The war on Gaza has amplified global concerns over the dangers posed by artificial intelligence and underscored the urgent need for responsible governance of its use. The dark side of this advanced technology has been revealed, not only through its potential to aid in physical violence but also through its power to manipulate perception and shape public opinion. While AI is being actively used as a weapon within the evolving landscape of disinformation warfare, it remains a double-edged sword. It possesses immense potential for documenting events, raising global awareness, drawing international attention, and fueling solidarity movements. These contrasting capabilities bring to light the profound ethical and legal challenges surrounding the use of artificial intelligence in conflict.

Although AI technologies have been widely misused during the war on Gaza, the consequences of this ongoing information war remain uncertain. What is clear, however, is that the Israeli occupation, with its technological advantage, has played a central and dominant role in shaping this evolving battlefield.