This article was originally written in Arabic and translated into English using AI tools, followed by editorial revisions to ensure clarity and accuracy.

How did the conversation about using artificial intelligence in journalism become merely a "trend"? And can we say that much of the media discourse on AI’s potential remains broad and speculative rather than a tangible reality in newsrooms?

The relationship between artificial intelligence technologies and journalism in 2024 calls for a critical analysis. The ongoing attention and debate on this topic partly highlight the significant influence of major tech companies over media narratives. This dynamic often shifts the balance of power in favour of these corporations and the nations they represent.

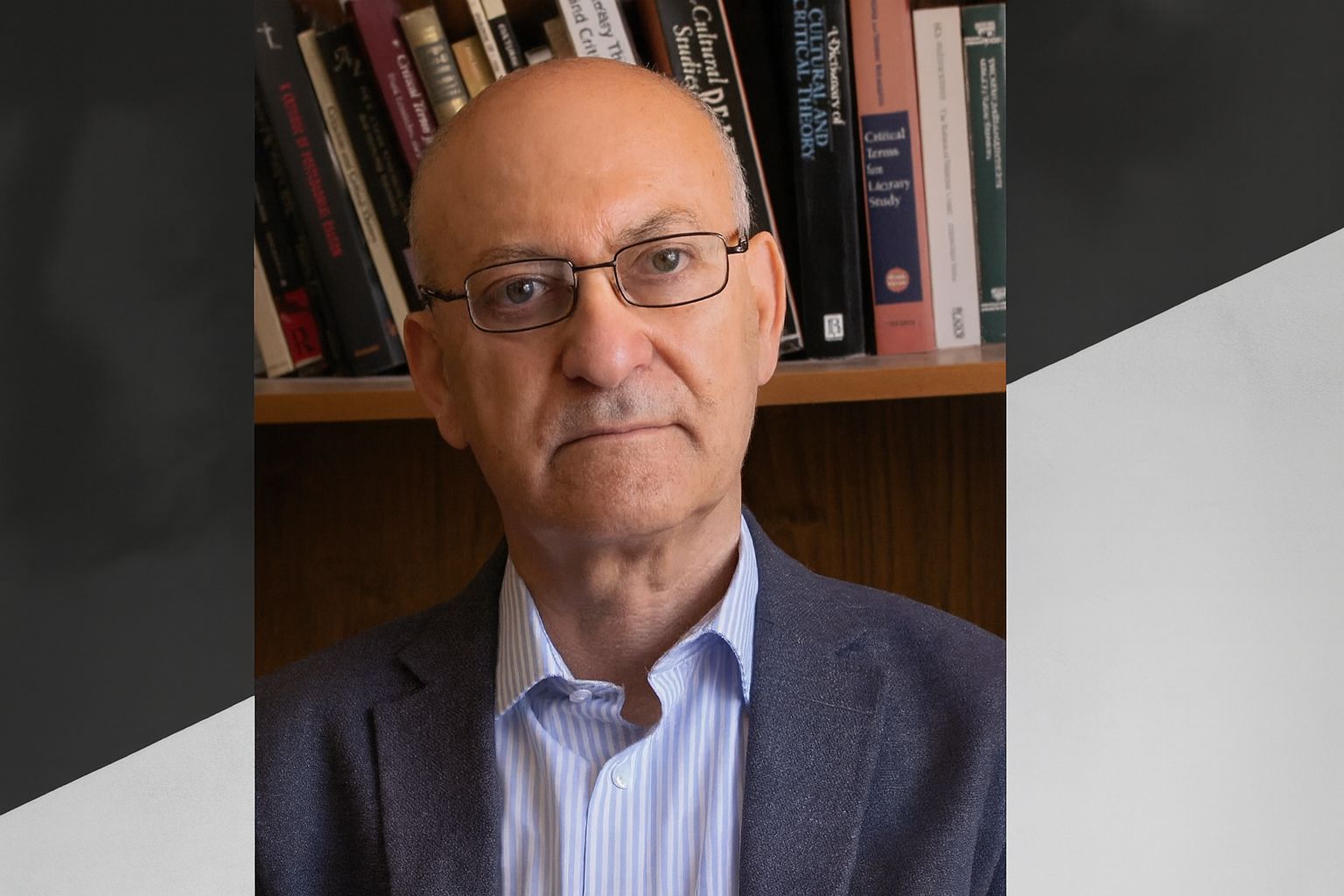

This observation was made by American technology critic Parmy Olson in an article last October titled “Big Tech Has Our Attention — Just Not Our Trust,” in which she explained how the big players in Silicon Valley could paint a dreamy, imaginary picture of what the future should look like thanks to artificial intelligence, making promises about “prosperity promised to humanity.” However, this prosperity has remained limited to a few dominant companies from a single country, with a market value exceeding 8.2 trillion US dollars.

The Illusion of AI-Driven Journalism

Much of today's media talk about the potential benefits of artificial intelligence, and generative AI in particular, likewise remains more general and imaginary than a tangible reality that journalists can experience, so it is not yet accurate to claim that these technologies will change the future of the profession, for better or worse. Although there is no evidence to support this general claim in the Arab case, surveys and reports issued by Western organisations and institutions help us form a more balanced picture of this enthusiasm for artificial intelligence.

According to a report by the Tow Centre for Digital Journalism, produced in collaboration with Oxford University and the Oxford Internet Institute, the role of artificial intelligence in newsrooms is still limited to performing “mundane” tasks. The report also confirms that artificial intelligence “has not proven to be the most effective quick fix for journalists in many cases,” especially on the editorial side. The efficiency of artificial intelligence applications largely depends on the nature and context of the task, as well as the skills and limitations of those using them. These limitations include scepticism about the reliability of AI outputs, concerns among journalists about harming their professional reputation through overreliance on AI, and the inherent challenges of automating specific journalistic tasks. As highlighted in the report, these factors significantly affect AI's perceived effectiveness in journalism.

Other contextual obstacles also hinder the expansion of artificial intelligence applications in the field. These include the resistance of journalistic tradition to the encroachment of technology on work that is essentially human in nature, the underdevelopment of legislative frameworks and codes of conduct regulating the safe use of these technologies, and weak technical infrastructure and skills, which prevent existing models from being adapted to serve journalists.

The Power Imbalance: Journalism vs. Big Tech

The main issue here is neither journalistic nor technical, in the narrow sense of how the products of artificial intelligence companies are used. Rather, it relates to the unequal dependence between journalism institutions and these companies and their products. This dependency narrows even the imagined margin for enhancing work efficiency and competitiveness and reducing costs, especially for independent journalism projects, according to Imad Rawashdeh, a Jordanian journalist and executive editor of the independent website Almurrassel (The Reporter). Rawashdeh believes that generative AI platforms are tools that facilitate aspects of journalistic work and break with the antagonistic relationship journalists have with search engines, where results are controlled and relevant information is difficult to access.

However, the same problem will appear in artificial intelligence tools, which can reproduce biases for purely profit-driven and/or political reasons. Rawashdeh therefore sees a need for journalism to raise legitimate critical questions about how it deals with the products of major technology companies, which almost dominate journalistic publishing spaces. He also sees a need to view them first as monopolistic models that follow political projects with specific agendas.

AI and the Future of Journalism: A Tool or a Trap?

Within journalistic institutions themselves, another logic governs how best to approach these technical developments. According to the Tow Centre report, this logic stems from a central question:

Can we rely solely on technology companies that provide artificial intelligence services? In other words, can we depend on them and submit to their costs and terms of use? And can we believe that they develop their products in a way that serves the profession of journalism and its workers?

Journalists’ answers to this question revealed genuine concern about the toxic relationship between journalism and technology companies, as the gap continues to widen between those who own the technology and the capabilities to develop it and those who are forced to limit themselves to harmful use of it. For example, major media outlets such as the New York Times have enormous capacity to invest in developing in-house AI software that suits their needs and draws on their vast journalistic archives and their digital and technical infrastructure. They also take legal action against any company that tries to seize their content to train generative models, as they recently did with OpenAI. Smaller institutions will remain subject to whatever technology companies provide, each according to its financial and technical capacity. This hinders their independence and limits their chances of survival in a new environment of technical competition, not to mention that technology companies exploit their content and archives to enhance the power of their own models.

This aspect of the threat is illustrated by Felix Simon, a researcher at the Oxford Internet Institute and supervisor of the report. In a recorded interview with Alan Rusbridger, editor of the British magazine “Prospect,” he warned of what he called the “technical infrastructure trap,” in which new technologies imposed from above and coming from the West become an inevitable fate that journalists around the world must keep up with, without regard for the impact on journalistic content and its quality, on the contexts in which journalists work, or on their priorities and their role in the public sphere under different political and economic circumstances. Simon recalls recent manifestations of this unbalanced relationship between the two sectors on social media platforms, which control news content through their algorithms and marginalise it in favour of content better able to attract audiences and capture their attention, a practice whose effects artificial intelligence technologies may exacerbate in the future.

Today, those working in journalism are interested in artificial intelligence, driven by its capabilities in generating, editing and proofreading texts, processing vast amounts of data, improving the performance of specific products according to audience preferences, or modifying subscription and payment models. However, none of these capabilities has become widespread across journalistic institutions in general, especially in many countries of the Global South, where journalism faces dire threats from undemocratic authorities or brutal military occupation, as in Palestine. This is a very sensitive reality, and it should set an order of priorities in which enthusiastic evangelism for artificial intelligence technologies, and the urging to adopt them, does not come first.

![Palestinian journalists attempt to connect to the internet using their phones in Rafah on the southern Gaza Strip. [Said Khatib/AFP]](/sites/default/files/ajr/2025/34962UB-highres-1705225575%20Large.jpeg)