What does the future really hold for journalism and artificial intelligence (AI)?

When news emerged several years ago that China's Xinhua news agency was using a virtual news anchor, a move then described as a "global first" in harnessing artificial intelligence (AI) technologies, many saw it as an existential threat to the journalism profession.

More recently, it emerged that the popular technology and electronics website CNET had been publishing dozens of AI-generated articles without openly announcing the project.

The articles carried the byline "CNET Money Staff", which gave readers no indication that they had been produced by an AI system unless they clicked on the name.

This made it look as though the publisher was trying to shield the experiment from public scrutiny, and it raised concerns about transparency as well as about potential job losses among the site's writers.

There are also serious doubts about the accuracy of the latest AI text generators.

Futurism, another website specialising in science and technology, published scathing criticism of CNET's articles, saying that they contained "very dumb errors" when explaining basic concepts in subjects such as finance.

A CNET spokesperson has since confirmed that the site is reviewing all of its AI-generated articles and making the necessary corrections. In a subsequent statement, CNET's editor-in-chief, Connie Guglielmo, defended the website's reputation and credibility.

She announced that a note would be added to all affected articles explaining that they had been produced with the help of AI and then reviewed and edited by the editorial team.

Guglielmo, who said that CNET had published about 75 articles of this kind, described the initiative as an "experiment" aimed at supporting the work of editors.

As The Washington Post points out, it can be difficult to tell AI-generated content from human writing, even though AI output is often full of clichés and lacks emotional resonance, humour and creativity.

All of this raises several questions. What do such experiments hope to achieve? Should we, as journalists, be concerned or excited about these developments? And what can we learn from all of this?

Is Our Perception Shaped by Hollywood?

A horde of robots with glowing brains breaks through laboratory walls and conquers the world, seizing every job and rendering humans redundant. This is the Hollywood-inspired image that comes to mind for many people, journalists included, when they discuss advances in AI.

A look at the history helps. The term "artificial intelligence" dates back to the 1950s. Although it has since acquired various meanings and now covers multiple subfields, there is broad consensus on its core: AI involves developing computer systems capable of performing tasks that would ordinarily require human intelligence.

UNESCO defines AI as enabling "machines to imitate human intelligence in processes such as perception, problem-solving, linguistic interaction, or even creativity."

Such systems learn from data, recognise patterns and make judgements with little or no human intervention.

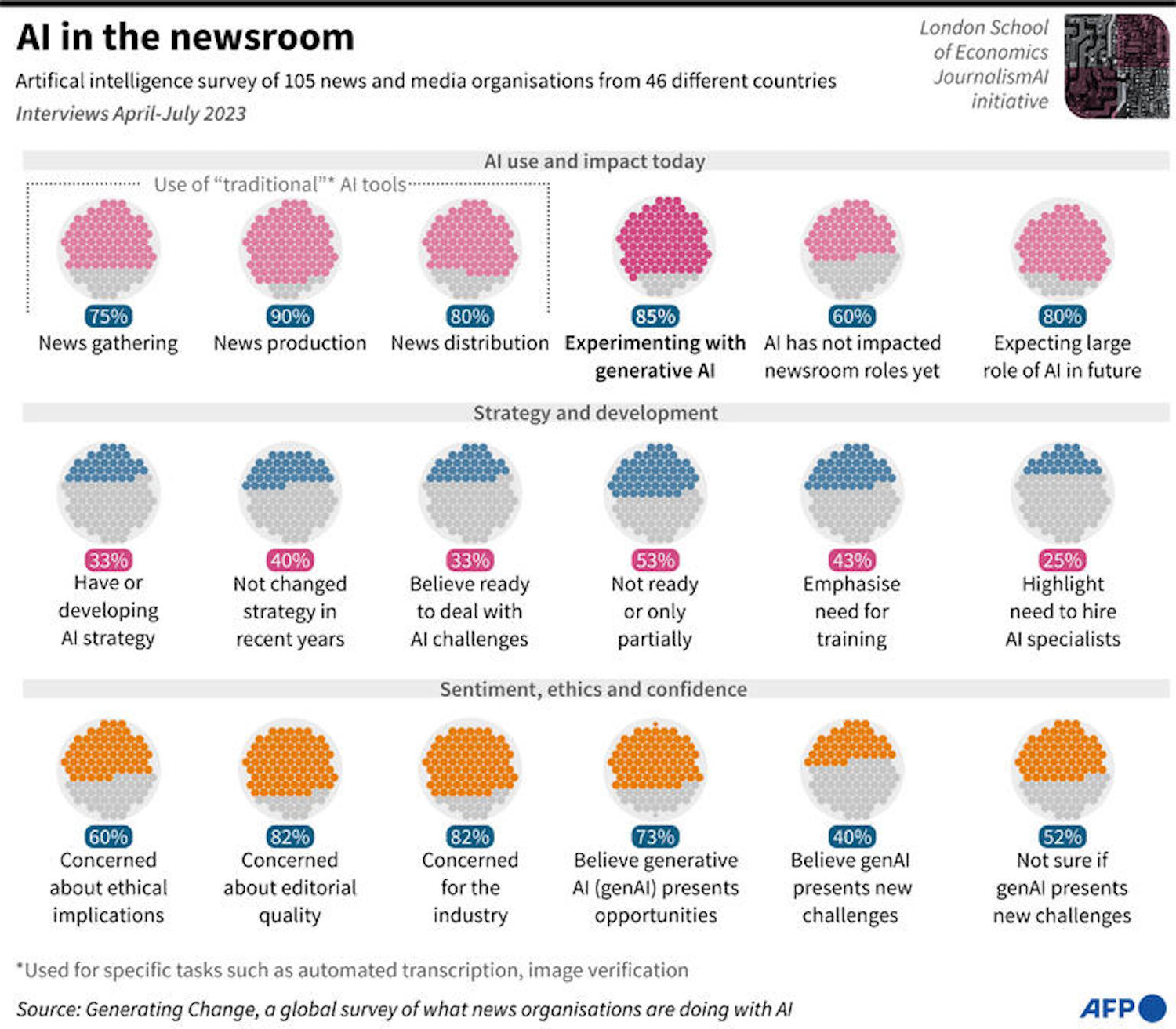

Mattia Peretti, director of JournalismAI, a project affiliated with the London School of Economics, highlights something essential to understanding AI. He distinguishes between the AI available today, known as "narrow AI" (computer programs that can perform a single task extremely well, sometimes better than humans), and the AI usually portrayed in science fiction, known as "artificial general intelligence".

General AI, meaning machines that think and operate like the human mind, remains largely a concept. Efforts continue to build systems with flexible behaviour and abilities such as memory, independent learning and emotional response, but this is not yet a reality.

For this reason, Peretti advises journalists to be more cautious when reading or writing anything that uses the term "artificial intelligence". Terms such as "algorithms" or "specific programs" may be more accurate.

Peretti also stresses that applying AI in any field is not a one-size-fits-all process or a ready-made mould. It requires understanding the particular needs of each case and devising an appropriate strategy, including an assessment of the technology's strengths and weaknesses.

Ultimately, AI, like the technological innovations that came before it, has the potential to reshape newsrooms. But it is up to humans to decide what they want from it: machines have no ambition of their own and will not be "stealing" jobs any time soon.

How Could AI Affect Journalism?

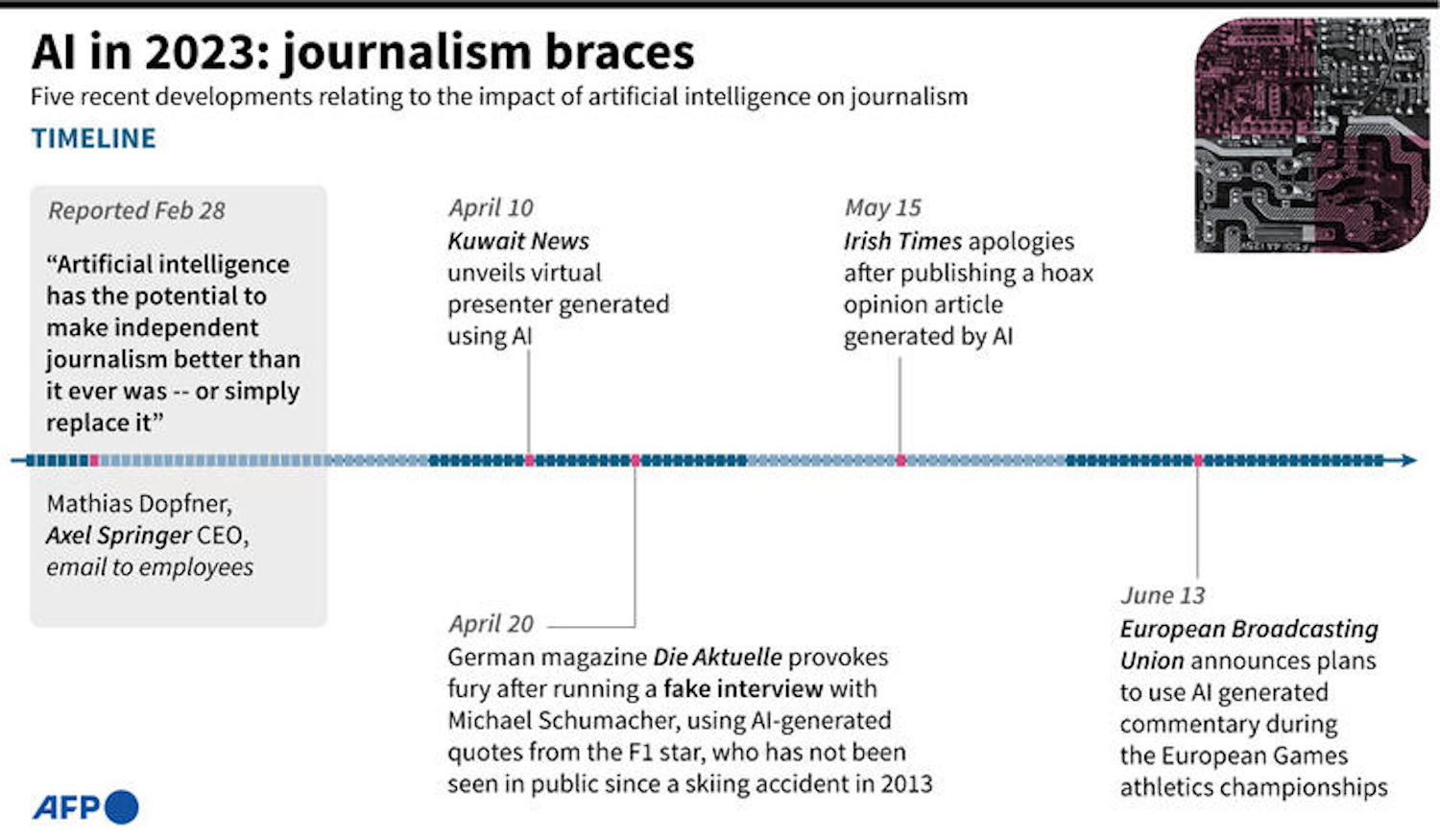

According to the Reuters Institute's report on journalism, media and technology trends and predictions for 2023, the next wave of technological innovation is already upon us: continual advances in AI that present a growing array of opportunities and challenges for journalism.

Among the many discussions of how AI affects newsrooms was a 2017 forum held by the Tow Center for Digital Journalism and the Brown Institute for Media Innovation.

Attendees discussed questions such as: How can journalists use AI to assist in reporting? What roles could AI potentially replace? What are some of the areas in AI that news organisations have not yet capitalised upon? Will AI ultimately become a part of every news story?

The early good news is that there are many indications that, used correctly, AI will enhance journalists' work rather than replace it.

AI is well suited to the tedious, repetitive tasks that can consume much of a journalist's time, such as transcribing interviews or sifting through the day's comments. What is essential is that humans remain actively involved in the process.

This is particularly important given the knowledge and communication gaps that exist between the engineers who design AI and the journalists who make use of it. Vigilance and care are required from both sides.

This is because AI systems can behave unpredictably, which makes potential problems difficult to pinpoint in advance.

Tried and Tested is Best

Perhaps the best way to understand these technologies more deeply is to experiment with them directly. One example is a German broadcasting team in Bavaria that launched a pioneering experiment, setting up a lab dedicated to using AI to develop investigative stories.

According to Jeremy Gilbert, a professor specialising in digital media strategy at Northwestern University, there are three key areas within journalism where artificial intelligence could have a significant impact:

Data

Journalists today are more burdened with data than ever before, particularly when it comes to investigative projects.

AI tools can help sift through vast volumes of documents and open up new avenues for finding untapped stories. Journalists can also train these systems to monitor emerging trends and send instant alerts.

Customising the News Experience

News organisations that have not yet fully embraced digitalisation are likely to find themselves in a precarious position, struggling to meet their audience's shifting expectations.

Digitalisation has tightened feedback loops and helped organisations learn more about their audiences.

AI can not only infer what a reader already knows about a subject from their past digital behaviour but also use that data to deliver updates tailored to their needs.

Reimagining the Structure and Content of Stories

Finding information, asking questions, writing and editing a story and then publishing it on social media may no longer be enough. Journalists will need to think more creatively about how to craft different versions of a single story.

Gilbert gives the example of someone asking Alexa, Amazon's virtual assistant, a question. They expect a specific, concise answer, not a 1,000-word story with the answer buried somewhere inside.

Stories themselves must therefore become answers to the questions news consumers ask, shaped according to who is asking. A 35-year-old, for instance, is looking for a different answer from a nine-year-old. Producing this kind of tailored narrative at scale is realistically achievable only with the help of AI.

The experience of ChatGPT is a good illustration of the potential for creating new types of semi-automated content efficiently.

In the same vein, Peretti highlights the potential of AI to identify, understand and mitigate biases in newsrooms, to analyse under-covered topics and even to rethink the core journalistic product around the audience's evolving needs and experiences.

Translation, fact-checking, curating valuable social media contributions, suggesting improvements in phrasing and prioritising breaking news are among the areas where AI appears particularly promising.

Accordingly, media companies are increasingly building AI into their operations. According to one survey, nearly three in 10 media companies say AI has become a regular part of their routine activities, while 39 percent say they are experimenting with it.

Promising Experiments

For inspiration, two international news agencies have pioneered the use of AI, albeit with different approaches: Reuters, which develops most of its AI tools in-house, and the Associated Press, which relies on buying tools and partnering with startups.

The New York Times has also made use of AI to assist its journalists in improving phrasing and selecting keywords through a tool known as "EDITOR".

In 2016, The Washington Post used an automated writing tool called "Heliograf" to help its journalists cover the Olympic Games in Rio de Janeiro, reporting results and tracking medals. The tool was later adapted for the newspaper's US election coverage and went on to produce more than 500 articles.

The Financial Times has built internal tools to audit its own journalism, for example by checking whether stories quote men far more often than women.

"The Newsroom", a startup, uses AI to write summaries of the day's most important news, together with contextual background and links to related stories.

A Journey Full of Pitfalls

AI raises new questions and ethical issues that could compound the challenges facing journalism. This means we need more journalists, not fewer, who are capable of monitoring and reporting on its misuse.

Among the phenomena requiring close scrutiny is deepfake technology and its use in fraud, blackmail and misinformation.

Plagiarism is also likely to proliferate in the age of AI. The writer Alex Kantrowitz experienced this first-hand when he discovered that an article written using AI included plagiarised material from a column he had published just two days earlier.

Because AI text generators produce content based on patterns learned from existing text and data, questions arise about their ability to produce genuinely new or original work.

One of the main ethical concerns surrounding the use of AI in journalism is bias. Algorithms are built and fed data by humans, so they can reflect the biases of the people who create them and of the data they are trained on. Another challenge is ensuring that algorithms do not violate human rights, whether by intruding on privacy, restricting freedom of choice or perpetuating existing social stereotypes.

How, for example, can values be programmed into a system? And how can anyone be held accountable when decisions result from fully automated processes?

An educational video series published by UNESCO stresses that AI can suppress the legitimate expression of opinions and limit people's exposure to diverse viewpoints. It may also worsen content manipulation and reduce media plurality, with significant repercussions for people's beliefs and behaviour.

A report by the Office of the UN High Commissioner for Human Rights analysed how AI affects people's rights to health, education, freedom of movement, peaceful assembly, freedom of association and freedom of expression. The analysis found multiple cases in which people were wrongly denied social security benefits because of flawed AI tools, and others in which people were arrested because of errors in facial recognition technology.

UN Recommendations

In November 2021, all member states of UNESCO adopted a historic agreement, the Recommendation on the Ethics of Artificial Intelligence, which sets out the shared values and principles needed to ensure the healthy development of AI.

The Recommendation focuses on data protection, a ban on using AI systems for social scoring and mass surveillance, and mechanisms for assessing the impact of AI systems on individuals.

It also addresses the direct and indirect environmental impact of AI systems throughout their lifecycle.

In addition, principles for the ethical use of AI within the United Nations system have been set out to guide the design, development, deployment and use of AI.

From these principles, a general ethical framework can be derived that also applies to the media sector. Even so, journalism's own codes of ethics will need updating to reflect the realities of the current era.

These core principles revolve around the following:

- Avoiding harm: AI systems should not be used in ways that cause or exacerbate harm.

- Justified use: AI systems should be used justifiably and within an appropriate context, not exceeding what is necessary to achieve legitimate objectives.

- Risk management: Risks should be identified, addressed and mitigated throughout the lifecycle of an AI system.

- No deception or rights infringement: AI systems should not be used in a manner that deceives individuals or threatens their rights and freedoms.

- Promoting sustainability: Any use of AI should aim to enhance environmental, economic and social sustainability.

- Data privacy: The privacy of individuals as data owners should be respected, protected and enhanced throughout the lifecycle of AI systems.

- Preserving human autonomy: AI should not undermine human freedom and independence, and human oversight should be ensured.

- Transparency and impact assessment: The use of AI should be transparent and its impact assessed, with protection for whistleblowers.

Taken together, these principles aim to provide a comprehensive and balanced framework that serves both technological progress and human well-being.

Translated from Arabic by Yousef Awadh