Generative AI is transforming journalism and journalism education, but this article shows that its benefits are unevenly distributed, often reinforcing Global North–South inequalities. The same tools that boost efficiency can also undermine critical thinking and deepen precarity in newsrooms and classrooms.

The rapid rise of Generative Artificial Intelligence (GenAI) tools such as ChatGPT, Gemini, and Copilot, and their growing deployment in newsrooms and higher education, particularly in journalism education, has sparked mixed reactions. These range from enthusiasm about innovation to concern over ethics, reliability, and the impact on professional practice. Some applaud GenAI for its potential to help journalists draft articles, summarise and translate content, and perform many other tasks; others warn that it promotes bias and stereotypes and undermines critical thinking.

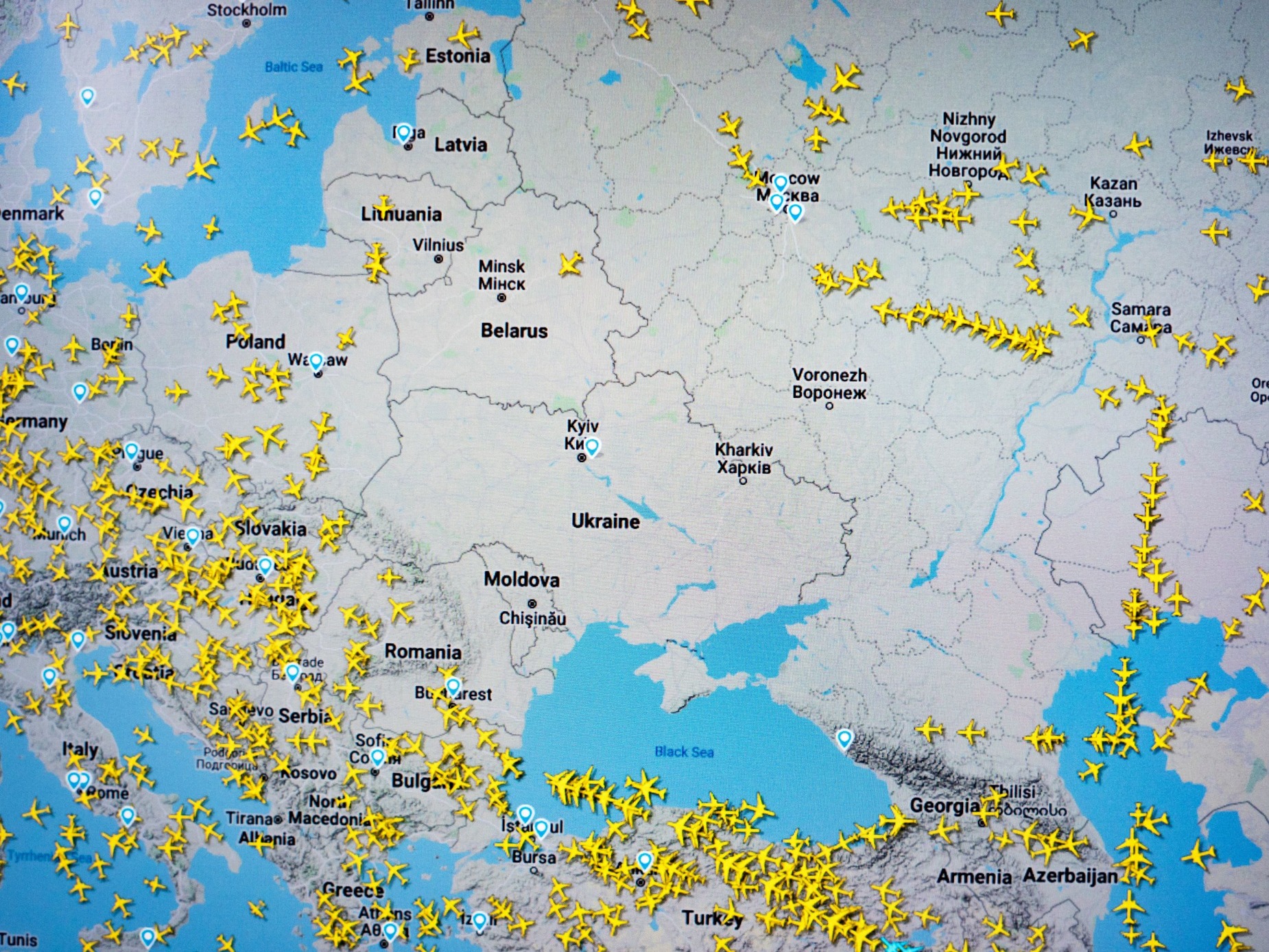

Newsrooms in both the Global North and South are increasingly employing a wide range of GenAI tools for editing, drafting articles, design, data visualisation, news gathering, and research. A Thomson Reuters Foundation report on the adoption of GenAI in the Global South notes that while the technology promises creativity and innovation in newsrooms, “existing narratives about AI adoption are often Western-centric, yet access to this technology differs worldwide, as do the problems faced by journalists and newsrooms” (Radcliffe, 2025).

Since the introduction of ChatGPT in November 2022, journalists and media organisations have raised concerns about hallucinations, the spread of misinformation, the further erosion of trust in the media, the lack of transparency in AI-generated outputs, and the amplification of bias and stereotypes. A 2025 study by researchers at the Massachusetts Institute of Technology (MIT) found that participants who used ChatGPT to write essays showed weaker neural engagement, poorer memory, decreased creativity, and more formulaic writing than those who relied on their own reasoning (Kosmyna et al., 2025).

In October and November 2025, I surveyed journalists and journalism educators in the Global North and South, with respondents based in the United Kingdom, Eswatini, South Africa, Zimbabwe, Bangladesh, and Uganda. The responses were mixed. Journalists working in Global South newsrooms frequently highlighted how limited access to AI tools and infrastructure, driven by the digital divide, hampers their ability to integrate GenAI into daily reporting. As a result, despite the global hype, the use of GenAI in these newsrooms remains minimal.


Generative AI: Ally and Adversary in the Newsroom and Classroom

Generative AI presents both opportunities and challenges for journalists and journalism educators. In an Advance HE article titled AI: Actual Intelligence – how embedded GenAI can promote the aims of higher education, Dom Henri (University of Hull), Nigel Francis (Cardiff University), and David Smith (Sheffield Hallam University) argue that while GenAI disrupts traditional teaching and assessment models, it also unlocks unprecedented opportunities for innovation (Henri, Francis and Smith, 2025). They contend that educators should encourage students to engage critically and thoughtfully with GenAI.

Dr Leone Hawthorne, a former world news anchor for CNN International and CNBC Europe and the founder and CEO of two UK satellite television channels, is now Senior Lecturer in Artificial Intelligence and Digital Media Production at Cardiff University's School of Journalism, Media and Culture (JOMEC) (Cardiff University, 2025). He teaches students to create dynamic content using GenAI while equipping them with a critical awareness of how it is used. His teaching addresses both the creative possibilities and the challenges posed by GenAI, including bias, disinformation, intellectual property rights, and privacy.

In the survey, Dr Hawthorne argued that the GenAI debate should shift from a producer-centric focus to one that considers audiences and consumers of journalism content. He stated:

“Too much attention is paid to how AI will affect producers, and not to how it will affect consumers of journalism. No producer class has a God-given right to a job or to the continued patronage of consumers. We have to produce content that people want, when they want it, and at a price they are willing to pay for it. If we do not do that, then we will be replaced by machines, and deserve that fate.”


He further argued that the journalism industry should address its own ethical shortcomings before criticising technology companies:

"I have very few ethical concerns about the use of AI in journalism. No more so than general ethical concerns about human journalists. I think the ethics issue has been blown up by Luddites who just want to 'wreck the machines' to save their jobs. To those who worry about this, I would say let's fix the ethical standards in the rest of journalism before we start preaching to Silicon Valley."

Professor Admire Mare, who teaches in the Department of Communication and Media at the University of Johannesburg in South Africa, acknowledged that GenAI enables more personalised learning but expressed serious concern about its role in eroding critical thinking and undermining academic integrity. He stated:

“GenAI has the potential to foster personalised learning and free up academics to focus on research and community service. However, it can threaten academic integrity, engender biases, and undermine critical thinking, which lies at the core of the humanities and social sciences.”


Professor Mare's views align with the findings of the 2025 MIT study (Kosmyna et al., 2025). Similarly, in a January 2024 article, Critical Thinking and Generative Artificial Intelligence, the UNESCO International Bureau of Education argued that critical thinking is a higher-order skill essential in everyday life and should be understood broadly, particularly in relation to interpreting what others say, deciding how to respond, and making significant life choices such as career paths and personal relationships (UNESCO International Bureau of Education, 2024).

JenningsJoy Chibike, a media and communication lecturer in the Department of Languages, Media and Communication Studies at Lupane State University in Zimbabwe, raised concerns about the quality of AI-generated journalism, noting:

“Stories often lack a human element and are poorly contextualised. There are spelling mistakes, and readers sometimes doubt the information provided, even though the creation and scrutiny of journalistic articles is fast.”

When asked about the adoption of GenAI in Eswatini newsrooms, one editor said that GenAI tools had enabled the production of high-quality articles and that journalists should adapt quickly and use the tools more effectively. He stated:

“For me, the main thing is that journalists must learn to adopt tools that make their work easier; this improves the way we work and enhances the quality of our output. When journalists know how to prompt and use these tools properly, what we do becomes much better.”

He acknowledged the risks, adding:

“Of course, the risks are there. Journalism depends on the salience of facts and storytelling. Used incorrectly, AI can lead to repetition and turn journalism into a copycat profession. We must move with the times. Journalists who used typewriters likely felt the same way when computers arrived. That is the same model of innovation. Times change, and we must keep pace.”


A journalist with more than 15 years' experience at a Zimbabwean publication pointed to limits on GenAI adoption in newsroom production workflows, caused by economic challenges and a lack of government and private sector support. He noted that while some newsrooms are experimenting with AI presenters, the digital divide remains a major obstacle:

“Although AI has become a technological necessity globally, Zimbabwean newsrooms face significant limitations in its adoption due to a lack of resources, training, and infrastructure. There is still very limited use of AI among media houses and journalists. While some newsrooms, particularly in broadcasting, are experimenting with AI presenters, most continue to rely on conventional journalism practices. Greater government and private sector investment is needed to scale up infrastructure and provide training so that journalists are not left behind as AI becomes more widely implemented.”

A Zimbabwean journalist who also teaches journalism at a local university expressed optimism about GenAI's potential to promote creativity in storytelling and to help reporters manage heavier workloads as newsrooms shrink, while emphasising the importance of transparency:

“Generative AI offers exciting opportunities for journalism, particularly in improving efficiency, data analysis, and creative storytelling. It is especially useful in contexts such as Zimbabwe, where newsrooms are becoming smaller and reporters must shoulder heavier workloads. However, there are valid ethical concerns, including misinformation and the erosion of public trust if AI tools are misused. For journalists in the Global South, limited access to these technologies and training could deepen existing inequalities in global news production and representation. Journalists should therefore declare when Generative AI has been used as a co-author.”

Similarly, in Uganda, a veteran journalist acknowledged GenAI's benefits, such as enhanced efficiency, while expressing concerns about inequality, job losses, and human autonomy. He observed:

“Generative AI can enhance efficiency by automating repetitive tasks and stimulate creativity and productivity through activities such as coding and content production. However, it also raises concerns about loss of human autonomy, societal inequality, and job displacement.”

He further noted that restrictive academic policies limit young people’s engagement with AI:

“In many Ugandan universities, AI is completely forbidden in academic work, particularly report writing. This prevents young people from appreciating its value when their work is rejected because AI has been used.”

References

Cardiff University (2025). AI and Digital Media Production (MA). Available at: https://www.cardiff.ac.uk/study/postgraduate/taught/courses/course/ai-and-digital-media-production-ma

Henri, D., Francis, N., and Smith, D. (2025). AI: Actual intelligence – how embedded GenAI can promote the aims of higher education. Advance HE. Available at: https://www.advance-he.ac.uk/news-and-views/ai-actual-intelligence-how-embedded-genai-can-promote-aims-higher-education

Kosmyna, N., Hauptmann, E., Yuan, Y. T., Situ, J., Liao, X. H., Beresnitzky, A. V., and Maes, P. (2025). Your brain on ChatGPT: Accumulation of cognitive debt when using an AI assistant for essay writing tasks. arXiv preprint arXiv:2506.08872.

Radcliffe, D. (2025). Journalism in the AI era: A TRF Insights survey. Thomson Reuters Foundation. Available at: https://www.trust.org/resource/ai-revolution-journalists-global-south/

UNESCO International Bureau of Education (2024). Critical thinking and generative artificial intelligence. Available at: https://www.ibe.unesco.org/en/articles/critical-thinking-and-generative-artificial-intelligence